DeepSeek R1 vs ChatGPT o1: Introducing Processor O1 and DeepSeek R1

Two Distinct Paths in AI

Introduction

The rapid rise of large language models (LLMs) has led to a proliferation of AI solutions, each with its own strengths and intended use cases. From general-purpose conversational AI to specialized retrieval systems, there is no “one-size-fits-all” approach. Two models often mentioned — though not always widely documented — are:

- Processor O1 (commonly linked to an early version of ChatGPT or a GPT-like system)

- DeepSeek R1 (a specialized retrieval and reasoning engine)

In this blog, we’ll explore how each model’s “processor” or “core engine” underpins its functionality. We’ll look at their architectural design, specialized components, performance trade-offs, and ideal usage scenarios.

1. Understanding the Core “Processor”

When we talk about a “processor” or “engine” in the context of LLMs, we’re referring to the core computational architecture of these models:

- The underlying neural network layers (Transformers, attention heads, feed-forward blocks).

- The approach to training and fine-tuning.

- The computational pipeline from input tokens to output predictions.

Both Processor O1 and DeepSeek R1 rely on Transformer-based designs; however, each implements nuanced differences to address its target functionalities.
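The last step of the pipeline described above, turning the model's raw output scores into a predicted token, can be illustrated with a minimal sketch. The vocabulary and scores below are made up for illustration; a real model produces one score per entry in a vocabulary of tens of thousands of tokens, and neither model's actual decoding strategy is documented here.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_next_token(logits, vocab):
    """Pick the most probable token from the model's output scores."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return vocab[best], probs[best]

# Hypothetical vocabulary and scores, chosen only for illustration.
vocab = ["cat", "dog", "sat", "mat"]
logits = [1.0, 0.5, 3.0, 0.1]

token, prob = greedy_next_token(logits, vocab)
print(f"next token: {token} (probability {prob:.3f})")
```

Greedy selection is the simplest choice; production systems often sample from the distribution instead to get more varied output.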

2. Processor O1 (ChatGPT O1) — The Conversational Core

2.1 Architectural Overview

Processor O1 is believed to be an early iteration of the ChatGPT line, which is itself based on GPT-like Transformer architectures. At its core, the “O1” model:

- Uses multi-head attention mechanisms to model relationships between words/tokens in a sequence.

- Often has a decoder-only architecture designed for causal language modeling (predicting the next word/token in a sequence).

- Is typically pre-trained on a massive corpus spanning websites, books, and other publicly available text, giving it broad coverage of topics.

Although the “O1” version might not be as large as the later GPT-3.5- or GPT-4-scale models, it still operates with billions of parameters, making it capable of fluent text generation.
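To make the attention and causal-modeling ideas above concrete, here is a simplified sketch of a single attention head with a causal mask, written in plain Python. It is an illustration of the general technique, not O1's actual implementation: real models use learned projection matrices for queries, keys, and values, and run many heads in parallel. The input vectors are made-up numbers.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i only sees positions 0..i."""
    dim = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: score only the current and earlier positions.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        # Pad with zeros so each row shows the masked (future) positions.
        all_weights.append(weights + [0.0] * (len(queries) - i - 1))
    return outputs, all_weights

# Toy 3-token sequence with 2-dimensional vectors (illustrative values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is one token's attention distribution. The zeros to the right of the diagonal are the causal mask at work: no token can attend to tokens that come after it, which is what makes next-token prediction possible.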

2.2 Conversational Fine-Tuning

A hallmark of any ChatGPT-style system is its dialogue fine-tuning:

- Instruction Tuning: The model is refined using human-labeled prompts and responses to align with user queries.

- Reinforcement Learning from Human Feedback (RLHF): Early prototypes, including O1, may use smaller RLHF datasets compared to more modern ChatGPT versions, but the principle remains the same — teaching the model to produce helpful, safe, and context-aware responses.

2.3 Processor O1 Strengths

- Broad Coverage: Extensive pre-training ensures that it can address a wide range of general knowledge queries.

- Natural Conversation: Thanks to its fine-tuning, it can maintain context and produce human-like interactions.

- Flexible Use Cases: Useful for everything from brainstorming to drafting emails or short articles.

2.4 Processor O1 Limitations

- Potential Hallucinations: Like most early GPT-based models, it may generate inaccurate or off-topic responses.

- Less Specialized: Not specifically tailored for deep retrieval on enterprise or proprietary data sources.

- Hardware Requirements: While smaller than cutting-edge GPT-4 models, running O1 locally (if that’s even offered) still demands powerful GPU resources for inference at scale.

3. DeepSeek R1 — The Retrieval & Reasoning Engine

3.1 Architectural Overview

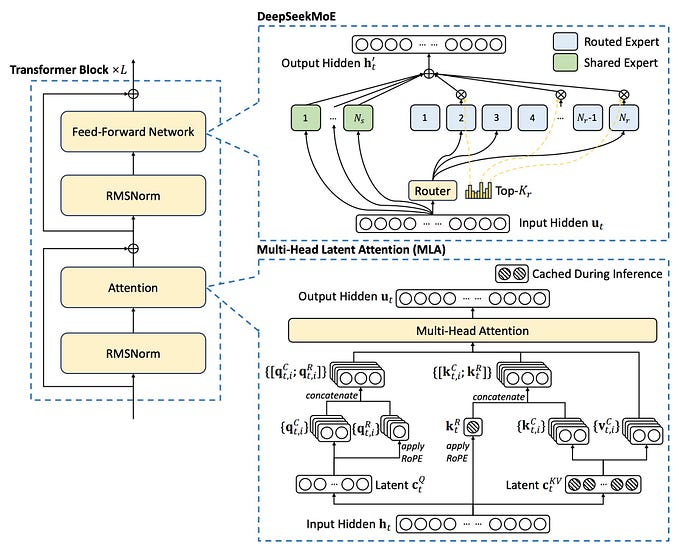

DeepSeek R1 is often described as a retrieval-augmented language model that relies on a specialized two-stage pipeline:

- Retriever Module: A subsystem that queries a vector database or index to locate relevant documents based on the user’s prompt or question.

- Reasoning Module: A specialized Transformer block that takes the retrieved context and applies advanced reasoning to produce answers.

The “R1” typically denotes the first major release or version of DeepSeek, meaning it may still be in the process of refining certain features, but it sets the foundation for future expansions.

3.2 Retrieval-Augmented Generation

Unlike a general-purpose LLM (which relies primarily on its internal, static knowledge learned during pre-training), DeepSeek R1 uses a hybrid approach:

- Context Injection: When a query arrives, the retriever locates the most relevant passages from external databases. These passages are “injected” into the model’s input context alongside the user prompt, where the attention layers can draw on them as timely, domain-specific data.

- Contextual Reasoning: The R1 engine then processes both the user prompt and the retrieved data to produce a more factually grounded response.

This design can significantly reduce hallucinations (since the system references actual documents), and it enables domain specialization — for instance, searching medical literature, legal precedents, or internal enterprise knowledge bases.
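The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not DeepSeek R1's API: the `embed` function is a bag-of-words stand-in for a learned embedding model, the in-memory list stands in for a vector database, and the document ids, texts, and prompt wording are all hypothetical. The final call to a language model is left out.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: bag-of-words counts (stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, top_k=2):
    """Return the top_k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d["text"])), reverse=True)
    return ranked[:top_k]

def build_prompt(query, passages):
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"[{p['id']}] {p['text']}" for p in passages)
    return (
        "Answer using only the context below and cite passage ids.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

# Hypothetical enterprise knowledge base.
documents = [
    {"id": "policy-1", "text": "Employees may carry over up to five vacation days per year."},
    {"id": "policy-2", "text": "Expense reports must be submitted within 30 days."},
    {"id": "policy-3", "text": "Remote work requires manager approval."},
]

query = "How many vacation days can employees carry over?"
passages = retrieve(query, documents, top_k=1)
prompt = build_prompt(query, passages)
print(prompt)  # this prompt would then be sent to the language model
```

Because the passage ids travel with the prompt, the same structure also supports showing users which documents an answer was based on.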

3.3 DeepSeek R1 Strengths

- Accurate & Up-to-Date: If the retrieval index is refreshed frequently, R1’s answers can reflect real-time or recent information.

- Domain-Specific Mastery: By connecting to custom or proprietary databases, R1 can become an expert on specific content.

- Interpretability: Some implementations let users see which documents were retrieved, lending more transparency to the reasoning.

3.4 DeepSeek R1 Limitations

- Complex Setup: Requires building and maintaining a retrieval infrastructure (e.g., vector databases).

- Less “Chatty”: While R1 can handle multi-turn Q&A, it’s not primarily optimized for open-ended, creative dialogue like ChatGPT.

- Early Release Quirks: Being a first major release (“R1”), certain UI/UX or advanced features might still be in beta.

4. Processor Performance and Hardware Considerations

An often-overlooked aspect when evaluating AI models is hardware requirements. While both Processor O1 (ChatGPT O1) and DeepSeek R1 rely on Transformer architectures, they approach inference differently.

1. Processor O1:

- A self-contained model that loads its entire neural network weights into memory.

- In a cloud environment, usage is typically measured by tokens processed, with the heavy lifting done on high-end GPUs (e.g., NVIDIA A100s, T4s, or similar).

- Local Deployment (if offered) requires a capable GPU to run inference in real-time.

2. DeepSeek R1:

- Incorporates an additional retriever that must be maintained — this is often a separate vector store powered by CPUs or GPUs, depending on the scale.

- The core language model itself could be similarly large, but part of the load can be distributed between the indexing (retrieval) process and the inference engine.

- Latency can be slightly higher because retrieval calls introduce extra steps in the pipeline, though improved indexing strategies can mitigate this.

Hardware Optimizations

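- Quantization & Pruning: Both models can benefit from quantization, which reduces numerical precision (FP32 → FP16 or INT8), and from pruning, which removes low-importance weights. Both techniques shrink the model’s memory footprint and can speed up inference.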

- Distributed Setups: For enterprise-level solutions, multi-GPU or multi-server deployments can handle higher throughput and serve more concurrent users.
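As a rough illustration of the quantization idea above, the sketch below applies simple symmetric INT8 quantization to a handful of weights and estimates how weight memory scales with precision. The weight values and the 7-billion-parameter model size are hypothetical, chosen only for the arithmetic; production toolchains use more refined schemes such as per-channel scales and calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed for the weights alone (ignores activations and caches)."""
    return num_params * bytes_per_param / 1e9

# Illustrative weights, not taken from any real model.
weights = [0.42, -1.27, 0.03, 0.88]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("max round-trip error:", max_error)

# Hypothetical 7-billion-parameter model at different precisions.
for name, size in [("FP32", 4), ("FP16", 2), ("INT8", 1)]:
    print(name, weight_memory_gb(7e9, size), "GB")
```

The trade-off is visible in the round-trip error: each weight can be off by up to half a quantization step, in exchange for a 4x reduction in weight memory relative to FP32.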

5. Real-World Use Cases

Processor O1 (ChatGPT O1)

- Customer Support Bots: Quick, broad-based answers to common user queries.

- Creative Writing & Brainstorming: Generating short stories, plot ideas, or marketing copy.

- Educational Assistance: Explaining concepts in plain language, with the caveat of potential inaccuracies if the content is outside the model’s training distribution.

DeepSeek R1

- Enterprise Knowledge Bases: Searching internal documents, policies, or catalogs, then generating specific answers.

- Legal & Compliance: Retrieving relevant clauses or case law and crafting summarized outcomes.

- Scientific/Technical Research: Searching large academic or technical databases, enabling domain-expert question-answering.

6. Points of Differentiation at a Glance

To summarize the distinctions in a more direct, bullet-point form:

Retrieval:

- Processor O1 (ChatGPT O1): Primarily relies on internal, pre-trained knowledge. Retrieval is available only if external plugins or advanced integrations are used.

- DeepSeek R1: Built with retrieval at the core; it excels when fresh or specialized data is critical.

Conversational vs. Structured:

- Processor O1: Tailored for conversational flows, more “open-ended.”

- DeepSeek R1: Offers structured Q&A flows, focusing on accuracy and direct references to found data.

Ease of Deployment:

- Processor O1: Straightforward cloud-based usage (often via an API with usage-based fees).

- DeepSeek R1: Requires an integrated search index + model, so more setup but potentially more specialized results.

Scalability & Performance:

- Processor O1: Scales easily via standard GPT cloud infrastructure.

- DeepSeek R1: Scales well for retrieval tasks but demands a robust indexing and retrieval infrastructure.

Conclusion

Processor O1 (ChatGPT O1) and DeepSeek R1 represent two different approaches to next-generation AI:

- Processor O1: Prioritizes fluid, human-like conversation and broad knowledge coverage. Its core processor is a GPT-style Transformer tuned for chat interactions, making it immediately accessible and versatile for general tasks.

- DeepSeek R1: Puts retrieval-augmented generation at its heart, setting itself up as the go-to solution when up-to-date, domain-specific, or enterprise-level accuracy is paramount.

For more info, please connect and follow me:

Github: https://github.com/manikcloud

LinkedIn: https://www.linkedin.com/in/vkmanik/

Email: varunmanik1@gmail.com

Facebook: https://www.facebook.com/cloudvirtualization/

YouTube: https://bit.ly/32fknRN

Twitter: https://twitter.com/varunkmanik